Welcome back to the “THIS is” blog series! This month’s installment goes back to the basics of Making the Shift by looking at one of the fundamentals of our research design: the Randomized Controlled Trial.

In developing Making the Shift (MtS), a primary objective has always been to establish a base of sound, scientific evidence for prevention and Housing First for Youth (HF4Y) interventions. We’ve certainly talked about this before! But this evidence base is so fundamental to MtS that it warrants a deeper discussion – in my opinion, anyway. And since I’m the one writing this blog series, I’ve decided to take you all along with me on this exploration of one of the more nuanced and controversial aspects of our work. It’ll be fun (read: informative), I promise!

What is a Randomized Controlled Trial?

Now that I’ve piqued your curiosity with those buzzwords, let’s talk for a minute about Randomized Controlled Trials. Wait, come back! Known in the research community as an “RCT,” a Randomized Controlled Trial is simply a study in which participants are randomly assigned to groups. Because chance decides the assignment, the groups tend to be comparable in size and in their defining characteristics. One group is known as the “control” or “treatment as usual” group, which is the standard against which everything else is compared. The other group (or groups) receives the “intervention”: the theory, approach, idea, or condition being field-tested. Having distinct groups of participants allows us to directly compare participants’ experiences, both individually and as groups. This is enormously helpful in assessing whether the proposed intervention had an effect compared to “treatment as usual,” and what exactly that effect was (it could be positive or negative). A large-scale RCT you have probably heard of, currently underway, is the Government of Ontario’s Basic Income Pilot.

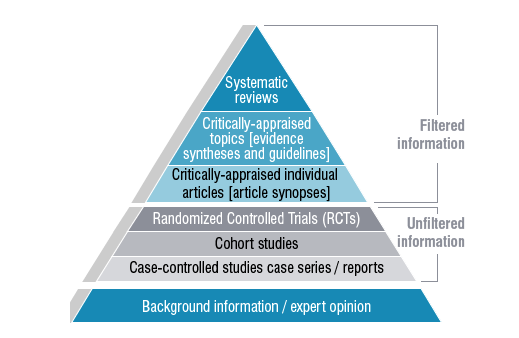

Within some research communities, the RCT is regarded as a “gold standard.” The diagram offers one illustration of how, for some, RCTs measure up to other research methodologies, with the most rigorous options near the top and anecdotal evidence forming the base. RCTs sit in the middle of the pyramid, reflecting a balance between rigour and flexibility, which is especially important when working with vulnerable populations such as youth.

So, from a research standpoint, it’s all good – you get controlled data that you can compare and cross-compare. An RCT can also be built into a mixed methods research design. This kind of design, which we are using for HF4Y, draws on both quantitative and qualitative data to provide a comprehensive picture of how the study is going.

Busting RCT Myths

There are many opinions out there on the merits and demerits of the RCT design. That is true of any approach to research; as virtually any researcher will tell you, there is no official “right” or “wrong” research methodology. Differing perspectives are to be expected, especially given the subject matter under investigation. Still, a number of concerns come up about RCTs, particularly those involving youth, so I thought it would be best to address some of them directly:

| Concern | Actuality |
|---|---|
| RCTs withhold resources from participants in the control group | Participants who are randomized into the “treatment as usual” group receive the services that already exist in the community. Access to services is not in any way withheld from participants. It helps to think of what participants would do if the RCT didn’t exist: what other resources and services would they be directed to? That’s exactly what the “treatment as usual” group receives. |
| RCTs are not suitable for youth | There are many examples of successful RCTs involving young participants. While it’s true that most RCTs are designed for adult participants, RCTs can also be appropriate for young participants, provided each participant gives informed consent – meaning they are able to fully understand the scope of the research, what they can expect to happen, the potential risks and rewards of participating, and their role in the study before signing up. The condition under study should, of course, be developmentally appropriate and ethically sound. |
| RCTs trap participants in interventions they may not want to participate in | Participants in either group can withdraw from an RCT at any time. They can also refuse to answer any questions, and withdraw their data and previous input from the study. Of course, participants are encouraged to take part through the entire process, but they are not obligated to do so. Young people are compensated for any time they give to the research – even if they choose to withdraw their data or stop participating. |
| RCTs are unfair | RCTs are regarded in the research community as one of the fairest ways to allocate new and limited services or resources: participants are randomly divided into groups, so each has an equal chance of being assigned to any group. Randomization removes human bias from the assignment decision, since who goes where is not decided by anyone’s judgment or emotions. |
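For the curious, here is a minimal Python sketch of what random assignment can look like. It is a hypothetical illustration, not the procedure MtS actually uses: the `randomize` function and the participant IDs are made up for this example. Shuffling the list of participants and splitting it in half produces two groups of equal size (when the number of participants is even), and every participant has the same one-in-two chance of landing in either group. Nobody’s judgment enters into who goes where.

```python
import random


def randomize(participant_ids, seed=None):
    """Hypothetical sketch (not the MtS procedure): shuffle, then split in two.

    With an even number of participants, each person has exactly a 1/2 chance
    of being in either group. With an odd number, the extra participant is
    placed in "treatment as usual".
    """
    rng = random.Random(seed)         # seeded generator so the allocation can be reproduced
    shuffled = list(participant_ids)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)             # random order decides group membership
    half = len(shuffled) // 2
    return {
        "intervention": shuffled[:half],
        "treatment as usual": shuffled[half:],
    }


# Usage with ten made-up participant IDs.
groups = randomize([f"P{i:02d}" for i in range(1, 11)], seed=2018)
print(len(groups["intervention"]), len(groups["treatment as usual"]))  # prints: 5 5
```

Fixing the seed makes the allocation reproducible, so it can be checked after the fact. Real trials often use more elaborate schemes, such as block or stratified randomization, but the core idea is the same: chance, not a person, decides each participant’s group.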

RCT Meets HF4Y

At this point, you might be asking yourself why MtS is using an RCT design, which is absolutely a fair question (and one of the top questions we get asked, in fact!). The most straightforward answer is that the At Home/Chez Soi (AH/CS) study on Housing First used the RCT research design. People might not realize that MtS was created as a direct successor to AH/CS, and that MtS is running a mini AH/CS on HF4Y. This is a direct response to the findings from AH/CS, specifically: “We suggest considering modifications of ‘Housing First’ to maintain fidelity to core principles while better meeting the needs of youth”.[1] So it follows that, like AH/CS, MtS is also implementing an RCT.

As mentioned, the RCT design is being used to assess the HF4Y intervention for MtS. Youth who are participating in the Ottawa and Toronto HF4Y projects have been randomized into either the “intervention” or “treatment as usual” group, with researchers closely monitoring their outcomes as groups and individuals. In the end, the goal is to understand and support an approach that promotes better outcomes for youth.

Takeaways

The key message to bear in mind is that nothing is guaranteed with an RCT: it is a process for evaluating whether a proposed intervention works, not an established solution. This is a crucial distinction to make. People might look at an RCT and think, “Here is an intervention that we know works, and it’s cruel to keep it from some participants; why are we doing this?” I can absolutely sympathize with that perspective, and the concern at its core, but the truth is that we really need strong data to back up our hypotheses.

In the case of MtS, we need to ensure that we are supporting the best option for youth. Strong data establishes “proof of concept” and the aforementioned evidence base that will inform policy and practice shifts concerning youth homelessness. Rigorous research and evaluation techniques – including the RCT design – are how we will get there.

[1] Kozloff et al. (2016), At Home/Chez Soi.

Photo credit: University of Canberra Library.

The “THIS is” blog series is a monthly look into the concepts and ideas at the heart of the Making the Shift Youth Homelessness Social Innovation Lab project. This blog is the sixth installment in the blog series; click to read the first, second, third, fourth, and fifth installments.